A team from the Biomedical Data Science Laboratory (BDSLAB), part of the ITACA Institute at the Universitat Politècnica de València (UPV), has led an international study laying the foundations for the development of safe, reliable and ethically responsible artificial intelligence (AI) systems in healthcare.

Published in the scientific journal Artificial Intelligence in Medicine, the work proposes a technical framework made up of 14 software design requirements aimed at reducing the risks that AI-based clinical solutions may pose to patients.

A practical framework to address real risks

The research, led by Juan Miguel García-Gómez, Vicent Blanes and Ascensión Doñate, all from BDSLAB-ITACA at UPV, starts from an increasingly evident reality: the impact of AI on healthcare, especially in areas such as diagnosis, monitoring, screening and, more broadly, clinical decision support, and the serious ethical, technical and safety challenges that its implementation raises.

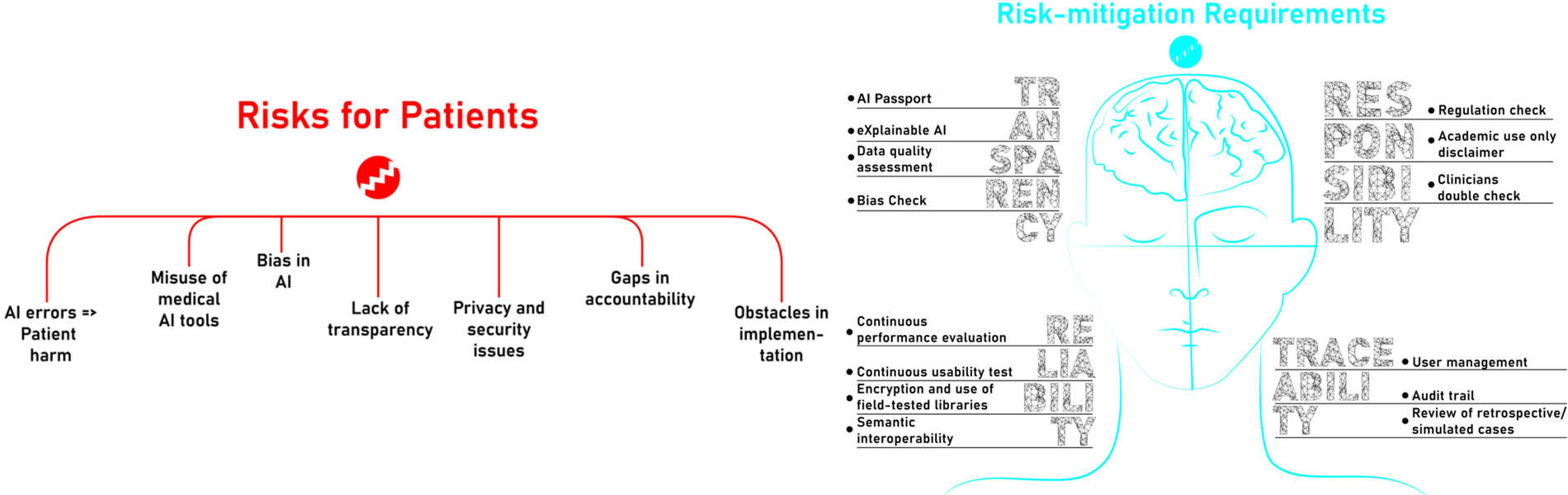

“The European Parliament’s Research Service has identified seven major risks that AI may pose for patients: prediction errors, malpractice, bias, lack of transparency, lack of privacy, absence of accountability and difficulties in implementation,” explains Juan Miguel García-Gómez, principal investigator at BDSLAB and lead author of the study.

To address these challenges, the BDSLAB researchers have developed a set of technical requirements that can be implemented in practice. The proposal complements the ethical guidelines promoted by the European Commission and is structured around four key pillars: reliability, transparency, traceability and accountability.

“Our aim is to mitigate the risk of potential damage that AI-based medical software may cause, by integrating engineering requirements that guarantee safer and more responsible systems,” stresses Professor García-Gómez.

Sector validation: consensus among professionals

To validate the usefulness and applicability of the proposed framework, the BDSLAB team, in collaboration with experts from the Technical Committee on AI at the Spanish Society of Health Informatics, conducted a survey between March and June 2024 involving 216 professionals from the medical ICT sector, including clinicians and engineers who could be both managers and end users of AI.

The results show broad consensus on the relevance of the proposed requirements. The study also reveals differing perspectives: clinical profiles prioritise aspects related to continuous evaluation, data quality, usability and safety, whereas technical profiles place more value on the implementation of the regulatory aspects of AI.

“This proposal fills the gap between general ethical principles and their concrete application in the design and development of AI medical software. It represents a practical roadmap, aligned with the new European regulatory requirements and with the need to protect patients’ rights,” adds Ascensión Doñate.

A framework aligned with the new European AI regulation

The study gains particular significance following the recent approval of the European Artificial Intelligence Regulation (AI Act, Regulation (EU) 2024/1689), which classifies AI systems in medicine as high-risk. This means such solutions must simultaneously comply with the Medical Devices Regulation (MDR 2017/745) and with requirements for traceability, human oversight and algorithm transparency.

In this context, the BDSLAB researchers believe that the proposed framework can serve as a guide for software developers, evaluators and regulatory authorities who wish to ensure the safety, fairness and reliability of AI-based clinical tools.

“Although international ethical frameworks have generally addressed the challenges posed by AI in medicine, practical recommendations aimed at minimising patient harm remain scarce. This is the first work that translates those principles into concrete, applicable technical requirements, designed to ensure the development of AI-based medical tools that are transparent, traceable and patient-centred,” concludes Vicent Blanes.

Reference: Juan M. Garcia-Gomez, Vicent Blanes-Selva, Celia Alvarez Romero, José Carlos de Bartolomé Cenzano, Felipe Pereira Mesquita, Alejandro Pazos, Ascensión Doñate-Martínez, Mitigating patient harm risks: A proposal of requirements for AI in healthcare, Artificial Intelligence in Medicine, Volume 167, 2025, 103168, ISSN 0933-3657, https://doi.org/10.1016/j.artmed.2025.103168.